How AI is Perfecting Lip Sync in Animation: The Future of Dialogue is Here

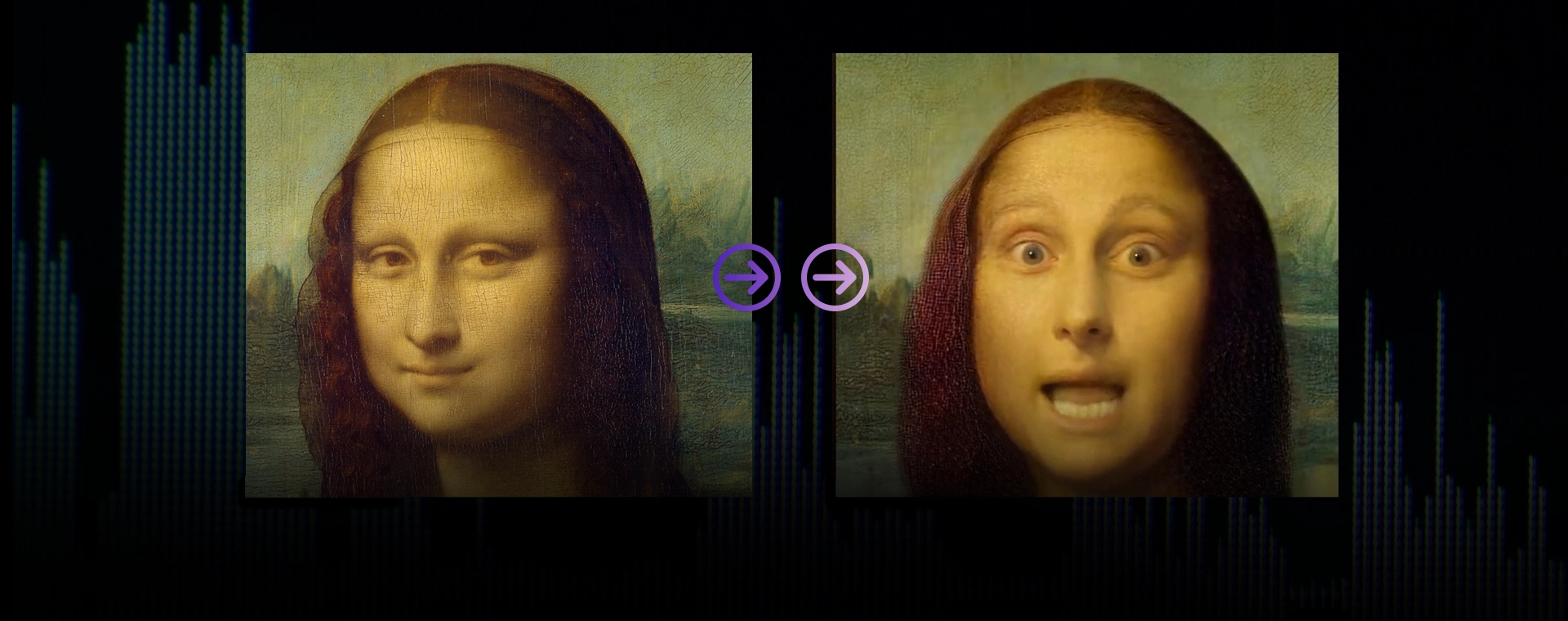

In a world where digital characters speak, blink, and express just like real humans, one detail has always stood out: the lip sync. That awkward mismatch between audio and mouth movement has long been the Achilles heel of animation and dubbing.

But not anymore. Thanks to machine learning and artificial intelligence, lip-syncing in animation has reached astonishing levels of realism, transforming how films, games, and virtual avatars communicate with audiences worldwide.

What Is AI Lip Syncing?

AI-driven lip sync refers to using machine learning models to generate mouth movements that align closely with spoken dialogue. Whether it’s a cartoon character, a video game NPC, or a digital avatar, AI can now animate lips and facial expressions to match the audio with high precision. Traditional methods required painstaking manual work by animators; AI does this in real time or near real time, which makes it faster, more scalable, and more cost-effective.

The Rise of Machine Learning in Animation

Recent years have brought groundbreaking advancements in AI lip sync. Technologies like NVIDIA's Audio2Face and Epic Games' MetaHuman Animator are leading the charge. Audio2Face, for instance, can analyze a voice clip and animate a 3D face with synchronized lips and expressions in real time. According to NVIDIA, the tool has already been integrated into its Omniverse platform and is used by both game and film studios to speed up production cycles.

Another industry milestone is Wav2Lip, developed by researchers at IIIT Hyderabad. It achieved speaker-independent, high-accuracy lip-syncing by training on thousands of videos. Since its release, Wav2Lip has inspired a wave of new models, such as StyleSync, DiffSpeaker, and VividWav2Lip—each improving upon fidelity, emotion retention, and synchronization accuracy. A user study on VividWav2Lip showed that 85% of viewers preferred the AI-generated sync over older methods, citing better realism and emotion matching.

Why It Matters: Statistics That Speak

The adoption of AI lip-sync technology isn’t just a trend—it’s backed by hard numbers:

- Studios using AI lip-sync tools have reported reductions of up to 80% in animation production time.
- Meta's use of Temporal Convolutional Networks improved viseme prediction accuracy by over 30%, enhancing live avatar realism on platforms like Horizon Worlds.
- Real-time systems like Oculus Lipsync achieve latency in the 10–50 ms range, making them effectively seamless to users (Meta, 2023).
- In film production, AI dubbing tools such as TrueSync have helped directors save tens of thousands of dollars per scene by eliminating the need for reshoots.
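To put the latency figure above in perspective, it helps to convert milliseconds into rendered frames. The sketch below is illustrative arithmetic only: the function name `latency_in_frames` and the 60 and 90 fps display rates are assumptions chosen for the example, not figures from any vendor.

```python
def latency_in_frames(latency_ms: float, fps: float) -> float:
    """Return how many rendered frames elapse during `latency_ms` at `fps`."""
    if latency_ms < 0 or fps <= 0:
        raise ValueError("latency_ms must be >= 0 and fps must be > 0")
    frame_time_ms = 1000.0 / fps  # duration of a single frame in milliseconds
    return latency_ms / frame_time_ms


if __name__ == "__main__":
    # 60 and 90 fps are assumed display rates, used only for illustration.
    for fps in (60, 90):
        low = latency_in_frames(10, fps)
        high = latency_in_frames(50, fps)
        print(f"{fps} fps: 10 ms = {low:.1f} frames, 50 ms = {high:.1f} frames")
```

At 60 fps, a 10–50 ms delay spans roughly 0.6 to 3 frames, which is why viewers rarely notice it.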

Real-World Applications

1. Film and TV Dubbing

Imagine watching a foreign movie where the actor's lips match the dubbed audio perfectly. That’s now possible with TrueSync, a groundbreaking tool by Flawless AI. The technology was famously used in the 2022 thriller Fall to replace explicit dialogue without reshooting scenes. Director Scott Mann said, “It was a genius move. We preserved the actor’s performance while aligning with rating guidelines.”

2. Video Games

Gaming giants like Square Enix have embraced AI for real-time lip-sync. In Final Fantasy VII Rebirth, a proprietary system called Lip-Sync ML allowed the development team to achieve cutscene-level quality in regular gameplay. Developers noted that this approach not only saved production time but also enabled multilingual support across global markets.

3. Virtual Avatars and Metaverse Communication

Whether it’s a VTuber on YouTube or a metaverse avatar in a business meeting, real-time AI lip sync enables digital characters to mimic human speech naturally. Meta’s Oculus Lipsync predicts 15 viseme classes from audio input, rendering speech visually even in noisy environments. According to a Meta research report, “Accurate real-time viseme prediction is foundational to immersive social VR experiences.”
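As a rough illustration of what happens after a model scores the 15 viseme classes, the sketch below turns per-frame scores into blend weights and smooths them over time so the mouth does not jitter. It is a minimal sketch, not Meta's implementation: the viseme labels are assumed from the Oculus Lipsync viseme reference and should be checked against the current SDK, and the input scores, the `alpha` smoothing factor, and all function names are hypothetical.

```python
import math

# Labels assumed from the Oculus Lipsync viseme reference (15 classes).
VISEMES = ["sil", "PP", "FF", "TH", "DD", "kk", "CH", "SS",
           "nn", "RR", "aa", "E", "ih", "oh", "ou"]


def softmax(scores):
    """Convert raw per-class scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def smooth_viseme_weights(frames, alpha=0.6):
    """Yield smoothed blend weights for each frame of raw model scores.

    `frames` is an iterable of 15-element score lists (hypothetical model
    output). `alpha` is an assumed smoothing factor; 1.0 disables smoothing.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    previous = None
    for scores in frames:
        if len(scores) != len(VISEMES):
            raise ValueError("expected one score per viseme")
        probs = softmax(scores)
        if previous is None:
            current = probs
        else:
            # Exponential moving average damps frame-to-frame jitter.
            current = [alpha * p + (1 - alpha) * q
                       for p, q in zip(probs, previous)]
        previous = current
        yield dict(zip(VISEMES, current))


def dominant_viseme(weights):
    """Return the viseme label with the largest weight."""
    return max(weights, key=weights.get)


if __name__ == "__main__":
    silence = [5.0] + [0.0] * 14               # made-up scores favouring "sil"
    open_aa = [0.0] * 10 + [5.0] + [0.0] * 4   # made-up scores favouring "aa"
    for weights in smooth_viseme_weights([silence, open_aa, open_aa]):
        print(dominant_viseme(weights), round(weights["sil"], 3))
```

With these inputs the dominant shape moves from `sil` to `aa` on the second frame, while the leftover `sil` weight decays over the following frames instead of snapping to zero.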

4. Content Creation & Social Media

Platforms like HeyGen, Synthesia, and D-ID are empowering content creators with simple tools to create AI-driven talking head videos. From e-learning modules to Instagram reels, creators can now generate multilingual, emotionally expressive content in minutes. This is especially powerful for influencers aiming to expand their audience base globally.

The Technology Behind the Magic

These advancements are built on the backs of powerful AI models and vast datasets. The key technologies include:

- Generative Adversarial Networks (GANs): Used in Wav2Lip and TrueSync to generate highly realistic lip motion.
- Transformers and Diffusion Models: Powering newer models like DiffSpeaker for context-aware, expressive lip-sync.
- Multimodal Neural Networks: Combining audio, visual, and emotional data for richer animation.
- Real-Time Neural Rendering: Seen in NVIDIA’s Audio2Face, offering instant feedback and high scalability.
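One common way to score how well lips match audio, used by SyncNet-style discriminators such as the lip-sync expert described in the Wav2Lip paper, is the cosine similarity between an audio embedding and a mouth-region embedding. The sketch below shows that idea in plain Python. It is a simplified illustration under stated assumptions: the embeddings are made-up, non-negative vectors rather than real network outputs, and the function names are hypothetical.

```python
import math


def sync_probability(video_emb, audio_emb, eps=1e-8):
    """Cosine similarity of two non-negative embeddings, clamped to [0, 1]."""
    if len(video_emb) != len(audio_emb):
        raise ValueError("embeddings must have the same length")
    dot = sum(v * a for v, a in zip(video_emb, audio_emb))
    norm_v = math.sqrt(sum(v * v for v in video_emb))
    norm_a = math.sqrt(sum(a * a for a in audio_emb))
    # Clamp to 1.0 to guard against floating-point overshoot.
    return min(dot / max(norm_v * norm_a, eps), 1.0)


def sync_loss(pairs, eps=1e-8):
    """Mean negative log-probability over in-sync (video, audio) pairs."""
    if not pairs:
        raise ValueError("need at least one embedding pair")
    losses = [-math.log(max(sync_probability(v, a, eps), eps))
              for v, a in pairs]
    return sum(losses) / len(losses)


if __name__ == "__main__":
    # Made-up embeddings: the first pair points the same way, the second does not.
    aligned = ([1.0, 0.0, 2.0], [2.0, 0.0, 4.0])
    misaligned = ([1.0, 0.0, 0.0], [0.0, 1.0, 0.0])
    print("aligned loss:", sync_loss([aligned]))
    print("misaligned loss:", sync_loss([misaligned]))
```

A generator trained against a loss like this is penalized whenever the mouth it draws stops agreeing with the audio, which is the pressure that pushes output toward tighter synchronization.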

Training datasets such as LRS2, LRS3, GRID, and VoxCeleb2 offer thousands of hours of audio-visual material, allowing these systems to generalize across languages, accents, and identities.

Voices of Authority: What the Experts Say

"We're steadily approaching the point where lip-sync technology becomes virtually indistinguishable from reality. Teeth and beards remain challenging, but progress is rapid," says Dmitry Rezanov, co-founder of Rask AI.

"Effective lip sync is comparable to great CGI—when executed perfectly, it becomes invisible," notes a spokesperson from Speech Graphics, a leader in real-time facial animation for games.

Even industry pioneers like John Lasseter, co-founder of Pixar, once said, “The art challenges the technology, and the technology inspires the art.” That philosophy is now more relevant than ever as AI begins to shape animation from the inside out.

The Future of Dialogue in Animation

As we move toward 2026 and beyond, AI lip-sync technology will become even more advanced, with models capable of:

- Translating dialogue across languages with native-level lip motion.
- Capturing subtle emotional nuances like sarcasm or fear.
- Delivering real-time, interactive avatars in AR/VR with near-imperceptible latency.

Final Thoughts

Animation studios, game developers, content creators, and educators are already reaping the benefits. With production times slashed, costs reduced, and quality improved, the only question that remains is: How will you use this technology to bring your characters to life?

In an age where attention spans are short and competition is global, getting lip-sync right is more than just a detail—it’s a differentiator. AI is no longer a futuristic dream; it’s a vital tool in the storyteller’s toolkit.